View on QuantumAI View on QuantumAI

|

Run in Google Colab Run in Google Colab

|

View source on GitHub View source on GitHub

|

|

Use precomputed optimal angles to measure the expected value of \(\langle C \rangle\) across a variety of problem types, sizes, \(p\)-depth, and random instances.

Setup

Install the ReCirq package:

try:

import recirq

except ImportError:

!pip install git+https://github.com/quantumlib/ReCirq

Now import Cirq, ReCirq and the module dependencies:

import recirq

import cirq

import numpy as np

import pandas as pd

Load the raw data

Go through each record, load in supporting objects, flatten everything into records, and put into a massive dataframe.

from recirq.qaoa.experiments.precomputed_execution_tasks import \

DEFAULT_BASE_DIR, DEFAULT_PROBLEM_GENERATION_BASE_DIR, DEFAULT_PRECOMPUTATION_BASE_DIR

records = []

for record in recirq.iterload_records(dataset_id="2020-03-tutorial", base_dir=DEFAULT_BASE_DIR):

dc_task = record['task']

apre_task = dc_task.precomputation_task

pgen_task = apre_task.generation_task

problem = recirq.load(pgen_task, base_dir=DEFAULT_PROBLEM_GENERATION_BASE_DIR)['problem']

record['problem'] = problem.graph

record['problem_type'] = problem.__class__.__name__

record['optimum'] = recirq.load(apre_task, base_dir=DEFAULT_PRECOMPUTATION_BASE_DIR)['optimum']

record['bitstrings'] = record['bitstrings'].bits

recirq.flatten_dataclass_into_record(record, 'task')

recirq.flatten_dataclass_into_record(record, 'precomputation_task')

recirq.flatten_dataclass_into_record(record, 'generation_task')

recirq.flatten_dataclass_into_record(record, 'optimum')

records.append(record)

df_raw = pd.DataFrame(records)

df_raw['timestamp'] = pd.to_datetime(df_raw['timestamp'])

df_raw.head()

Narrow down to relevant data

Drop unnecessary metadata and use bitstrings to compute the expected value of the energy. In general, it's better to save the raw data and lots of metadata so we can use it if it becomes necessary in the future.

from recirq.qaoa.simulation import hamiltonian_objectives, hamiltonian_objective_avg_and_err

import cirq_google as cg

def compute_energy_w_err(row):

permutation = []

for i, q in enumerate(row['qubits']):

fi = row['final_qubits'].index(q)

permutation.append(fi)

energy, err = hamiltonian_objective_avg_and_err(row['bitstrings'], row['problem'], permutation)

return pd.Series([energy, err], index=['energy', 'err'])

# Start cleaning up the raw data

df = df_raw.copy()

# Don't need these columns for present analysis

df = df.drop(['gammas', 'betas', 'circuit', 'violation_indices',

'precomputation_task.dataset_id',

'generation_task.dataset_id',

'generation_task.device_name'], axis=1)

# p is specified twice (from a parameter and from optimum)

assert (df['optimum.p'] == df['p']).all()

df = df.drop('optimum.p', axis=1)

# Compute energies

df = df.join(df.apply(compute_energy_w_err, axis=1))

df = df.drop(['bitstrings', 'qubits', 'final_qubits', 'problem'], axis=1)

# Normalize

df['energy_ratio'] = df['energy'] / df['min_c']

df['err_ratio'] = df['err'] * np.abs(1/df['min_c'])

df['f_val_ratio'] = df['f_val'] / df['min_c']

df

Plots

%matplotlib inline

from matplotlib import pyplot as plt

import seaborn as sns

sns.set_style('ticks')

plt.rc('axes', labelsize=16, titlesize=16)

plt.rc('xtick', labelsize=14)

plt.rc('ytick', labelsize=14)

plt.rc('legend', fontsize=14, title_fontsize=16)

# theme colors

QBLUE = '#1967d2'

QRED = '#ea4335ff'

QGOLD = '#fbbc05ff'

QGREEN = '#34a853ff'

QGOLD2 = '#ffca28'

QBLUE2 = '#1e88e5'

C = r'\langle C \rangle'

CMIN = r'C_\mathrm{min}'

COVERCMIN = f'${C}/{CMIN}$'

def percentile(n):

def percentile_(x):

return np.nanpercentile(x, n)

percentile_.__name__ = 'percentile_%s' % n

return percentile_

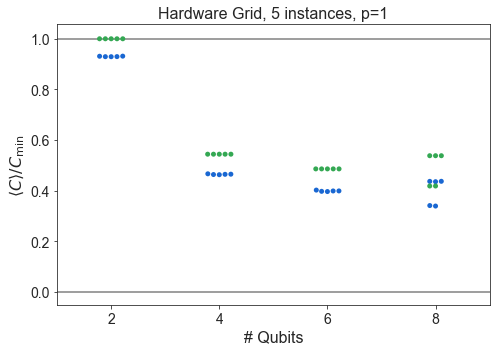

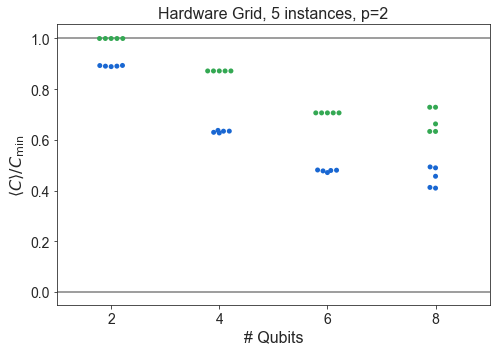

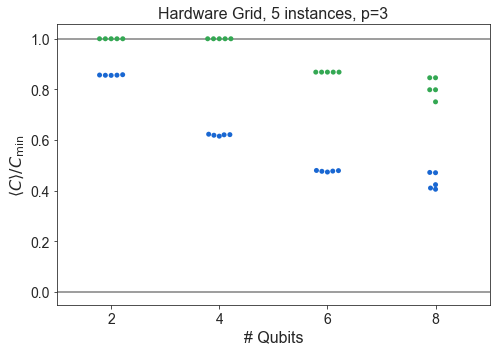

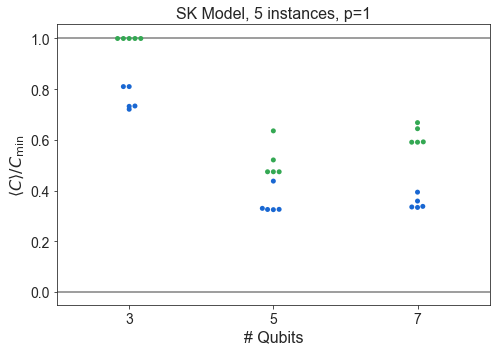

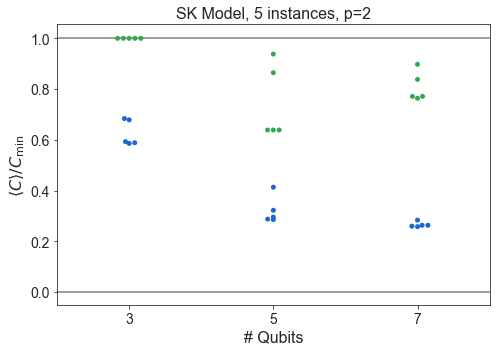

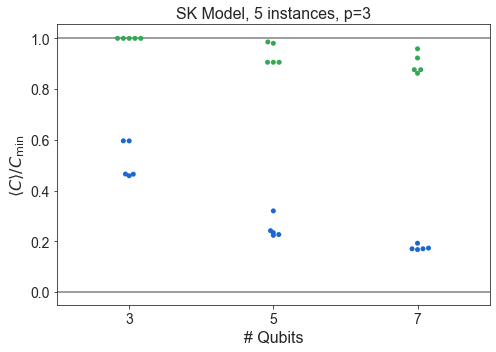

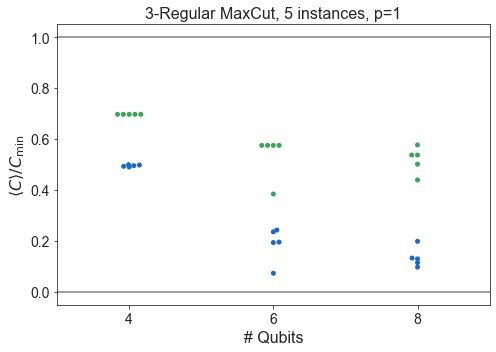

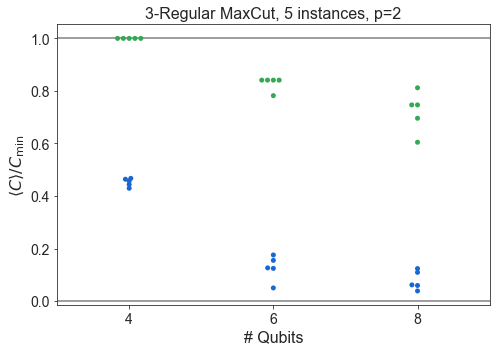

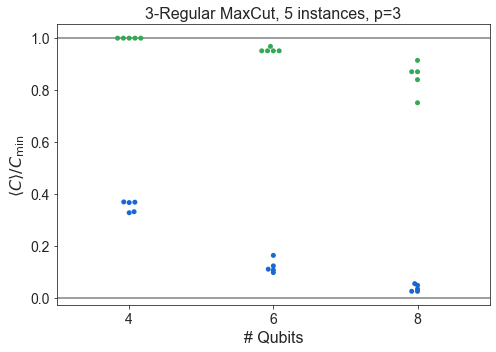

Raw swarm plots of all data

import numpy as np

from matplotlib import pyplot as plt

pretty_problem = {

'HardwareGridProblem': 'Hardware Grid',

'SKProblem': 'SK Model',

'ThreeRegularProblem': '3-Regular MaxCut'

}

for problem_type in ['HardwareGridProblem', 'SKProblem', 'ThreeRegularProblem']:

df1 = df

df1 = df1[df1['problem_type'] == problem_type]

for p in sorted(df1['p'].unique()):

dfb = df1

dfb = dfb[dfb['p'] == p]

dfb = dfb.sort_values(by='n_qubits')

plt.subplots(figsize=(7,5))

n_instances = dfb.groupby('n_qubits').count()['energy_ratio'].unique()

if len(n_instances) == 1:

n_instances = n_instances[0]

label = f'{n_instances}'

else:

label = f'{min(n_instances)} - {max(n_instances)}'

#sns.boxplot(dfb['n_qubits'], dfb['energy_ratio'], color=QBLUE, saturation=1)

#sns.boxplot(dfb['n_qubits'], dfb['f_val_ratio'], color=QGREEN, saturation=1)

sns.swarmplot(x=dfb['n_qubits'], y=dfb['energy_ratio'], color=QBLUE)

sns.swarmplot(x=dfb['n_qubits'], y=dfb['f_val_ratio'], color=QGREEN)

plt.axhline(1, color='grey', ls='-')

plt.axhline(0, color='grey', ls='-')

plt.title(f'{pretty_problem[problem_type]}, {label} instances, p={p}')

plt.xlabel('# Qubits')

plt.ylabel(COVERCMIN)

plt.tight_layout()

plt.show()

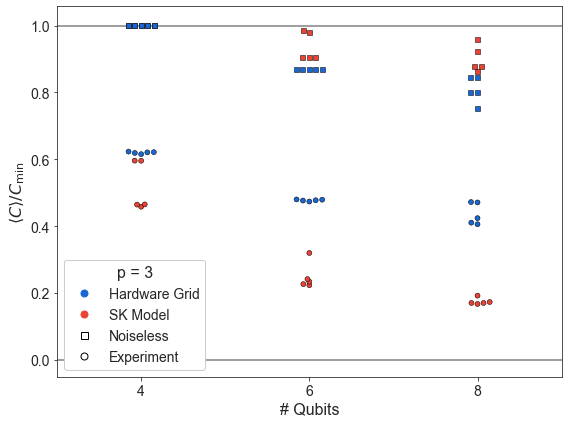

Compare SK and hardware grid vs. n

pretty_problem = {

'HardwareGridProblem': 'Hardware Grid',

'SKProblem': 'SK Model',

'ThreeRegularProblem': '3-Regular MaxCut'

}

df1 = df

df1 = df1[

((df1['problem_type'] == 'SKProblem') & (df1['p'] == 3))

| ((df1['problem_type'] == 'HardwareGridProblem') & (df1['p'] == 3))

]

df1 = df1.sort_values(by='n_qubits')

MINQ = 3

df1 = df1[df1['n_qubits'] >= MINQ]

plt.subplots(figsize=(8, 6))

plt.xlim((8, 23))

# SK

dfb = df1

dfb = dfb[dfb['problem_type'] == 'SKProblem']

sns.swarmplot(x=dfb['n_qubits'], y=dfb['energy_ratio'], s=5, linewidth=0.5, edgecolor='k', color=QRED)

sns.swarmplot(x=dfb['n_qubits'], y=dfb['f_val_ratio'], s=5, linewidth=0.5, edgecolor='k', color=QRED,

marker='s')

dfg = dfb.groupby('n_qubits').mean().reset_index()

# --------

# Hardware

dfb = df1

dfb = dfb[dfb['problem_type'] == 'HardwareGridProblem']

sns.swarmplot(x=dfb['n_qubits'], y=dfb['energy_ratio'], s=5, linewidth=0.5, edgecolor='k', color=QBLUE)

sns.swarmplot(x=dfb['n_qubits'], y=dfb['f_val_ratio'], s=5, linewidth=0.5, edgecolor='k', color=QBLUE,

marker='s')

dfg = dfb.groupby('n_qubits').mean().reset_index()

# -------

plt.axhline(1, color='grey', ls='-')

plt.axhline(0, color='grey', ls='-')

plt.xlabel('# Qubits')

plt.ylabel(COVERCMIN)

from matplotlib.patches import Patch

from matplotlib.lines import Line2D

from matplotlib.legend_handler import HandlerTuple

lelements = [

Line2D([0], [0], color=QBLUE, marker='o', ms=7, ls='', ),

Line2D([0], [0], color=QRED, marker='o', ms=7, ls='', ),

Line2D([0], [0], color='k', marker='s', ms=7, ls='', markerfacecolor='none'),

Line2D([0], [0], color='k', marker='o', ms=7, ls='', markerfacecolor='none'),

]

plt.legend(lelements, ['Hardware Grid', 'SK Model', 'Noiseless', 'Experiment', ], loc='best',

title=f'p = 3',

handler_map={tuple: HandlerTuple(ndivide=None)}, framealpha=1.0)

plt.tight_layout()

plt.show()

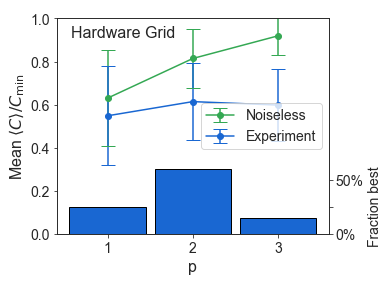

Hardware grid vs. p

dfb = df

dfb = dfb[dfb['problem_type'] == 'HardwareGridProblem']

dfb = dfb[['p', 'instance_i', 'n_qubits', 'energy_ratio', 'f_val_ratio']]

P_LIMIT = max(dfb['p'])

def max_over_p(group):

i = group['energy_ratio'].idxmax()

return group.loc[i][['energy_ratio', 'p']]

def count_p(group):

new = {}

for i, c in enumerate(np.bincount(group['p'], minlength=P_LIMIT+1)):

if i == 0:

continue

new[f'p{i}'] = c

return pd.Series(new)

dfgy = dfb.groupby(['n_qubits', 'instance_i']).apply(max_over_p).reset_index()

dfgz = dfgy.groupby(['n_qubits']).apply(count_p).reset_index()

# In the paper, we restrict to n > 10

# dfgz = dfgz[dfgz['n_qubits'] > 10]

dfgz = dfgz.set_index('n_qubits').sum(axis=0)

dfgz /= (dfgz.sum())

dfgz

p1 0.25 p2 0.60 p3 0.15 dtype: float64

dfb = df

dfb = dfb[dfb['problem_type'] == 'HardwareGridProblem']

dfb = dfb[['p', 'instance_i', 'n_qubits', 'energy_ratio', 'f_val_ratio']]

# In the paper, we restrict to n > 10

# dfb = dfb[dfb['n_qubits'] > 10]

dfg = dfb.groupby('p').agg(['median', percentile(25), percentile(75), 'mean', 'std']).reset_index()

plt.subplots(figsize=(5.5,4))

plt.errorbar(x=dfg['p'], y=dfg['f_val_ratio', 'mean'],

yerr=(dfg['f_val_ratio', 'std'],

dfg['f_val_ratio', 'std']),

fmt='o-',

capsize=7,

color=QGREEN,

label='Noiseless'

)

plt.errorbar(x=dfg['p'], y=dfg['energy_ratio', 'mean'],

yerr=(dfg['energy_ratio', 'std'],

dfg['energy_ratio', 'std']),

fmt='o-',

capsize=7,

color=QBLUE,

label='Experiment'

)

plt.xlabel('p')

plt.ylabel('Mean ' + COVERCMIN)

plt.ylim((0, 1))

plt.text(0.05, 0.9, r'Hardware Grid', fontsize=16, transform=plt.gca().transAxes, ha='left', va='bottom')

plt.legend(loc='center right')

ax2 = plt.gca().twinx() # instantiate a second axes that shares the same x-axis

dfgz_p = [int(s[1:]) for s in dfgz.index]

dfgz_y = dfgz.values

ax2.bar(dfgz_p, dfgz_y, color=QBLUE, width=0.9, lw=1, ec='k')

ax2.tick_params(axis='y')

ax2.set_ylim((0, 2))

ax2.set_yticks([0, 0.25, 0.50])

ax2.set_yticklabels(['0%', None, '50%'])

ax2.set_ylabel('Fraction best' + ' ' * 41, fontsize=14)

plt.tight_layout()